What Aspects to Consider When Creating Kafka Topics

Creating Kafka Topics based on requirements

Introduction

Apache Kafka is a distributed streaming platform designed to handle large amounts of data in real time. It processes and stores high volumes of data from multiple sources, making it a crucial tool for companies that need to handle large data streams.

This post dives deep into the art of crafting topics that not only move data, but do so with an efficiency and resilience that aligns seamlessly with your application’s demands. We’ll explore the intricate dance between diverse requirements and optimal configurations, considering factors like:

Data Type

- Text logs, sensor readings, stock updates: understanding the type of data shapes the ideal topic structure.

Read/Write Frequency

- A torrent of tweets or a trickle of financial transactions? Matching access patterns to partition choices maximizes performance.

Retention

- How long should your data linger in the Kafka river? Retention policies ensure information availability while balancing storage considerations.

Durability

- Is data loss an unforgivable sin, or can an occasional hiccup be tolerated? Replication factors and durability settings provide the necessary safety net.

Number of Partitions

- Splitting your data stream into multiple channels can boost throughput, but finding the sweet spot is crucial.

Replication Factor

- Spreading data across multiple replicas enhances fault tolerance, but comes at a cost in terms of storage and performance.

Message Size

- Don’t let jumbo messages clog the pipes! Understanding message sizes guides compression and size-limit settings and prevents bottlenecks.

Compression

- Squeeze the most out of your storage and bandwidth with effective compression techniques.

Before diving into the topic creation details, let’s describe some of the components we will take into consideration.


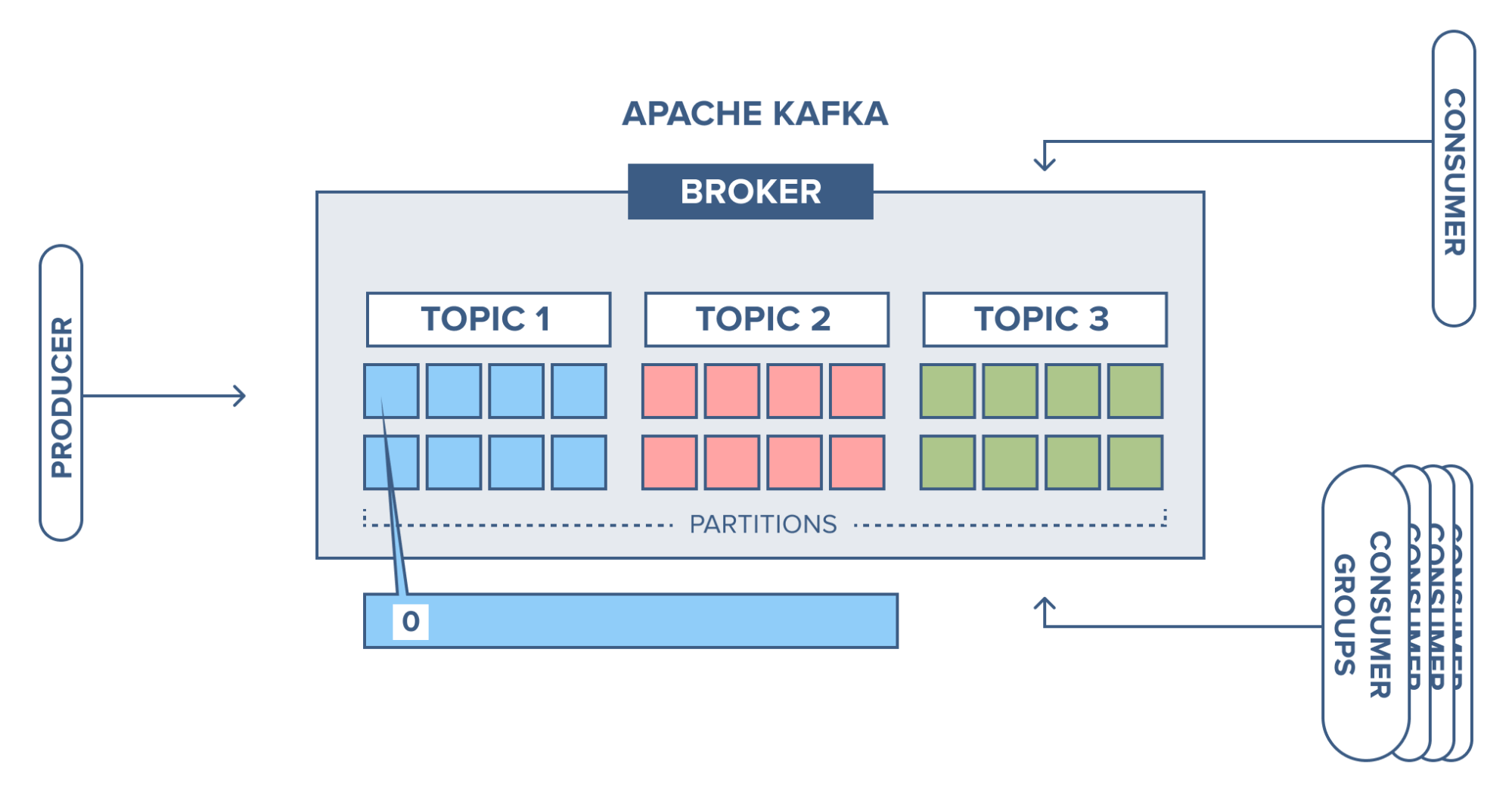

Kafka Broker

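A broker is a server that stores Kafka messages and serves them to producers and consumers. Brokers host partition replicas, keep follower replicas in sync with their leaders, and store consumer groups’ committed offsets in the internal __consumer_offsets topic. Producers, not brokers, decide which partition each message is written to.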

Kafka Topic

A topic is a category or feed name to which messages are published by a producer. It is a way to categorize and organize data in Kafka. Consumers can subscribe to one or more topics to receive messages.

Kafka Partition

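A partition is an ordered, append-only log that holds part of a topic’s data. Each partition’s leader lives on a single broker, and its replicas live on other brokers. Kafka partitions allow for scalability and parallel processing of data. Every message in a partition gets a sequential offset, so consumers read a partition’s messages in the order they were written. Ordering is not guaranteed across partitions.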
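To make the offset idea concrete, here is a minimal in-memory sketch. `ToyTopic` is a hypothetical class, not part of any Kafka client. Each partition is modeled as a plain list, and a record’s offset is its index in that list. Offsets are therefore counted independently per partition.

```python
from collections import defaultdict


class ToyTopic:
    """Hypothetical in-memory stand-in for a Kafka topic.

    Each partition is an append-only list; a record's offset is its index
    in that list, so offsets start at 0 independently in every partition.
    """

    def __init__(self, num_partitions: int) -> None:
        self.num_partitions = num_partitions
        self.logs = defaultdict(list)  # partition number -> list of values

    def append(self, partition: int, value: str) -> int:
        """Append a record to one partition and return its offset."""
        if not 0 <= partition < self.num_partitions:
            raise ValueError(f"partition {partition} does not exist")
        log = self.logs[partition]
        log.append(value)
        return len(log) - 1

    def read(self, partition: int, from_offset: int = 0) -> list:
        """Return (offset, value) pairs in the order they were appended."""
        log = self.logs[partition]
        return [(offset, log[offset]) for offset in range(from_offset, len(log))]


topic = ToyTopic(num_partitions=2)
topic.append(0, "a")  # offset 0 in partition 0
topic.append(1, "b")  # offset 0 in partition 1
topic.append(0, "c")  # offset 1 in partition 0
```

Both partitions contain an offset 0. That is why a consumer’s position is always a (partition, offset) pair, never a single topic-wide number.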

Kafka Producer

A producer is an application or process that writes data to Kafka topics. Producers are responsible for sending messages to Kafka brokers for storage and replication.

Kafka Consumer Group

A consumer group is a set of consumers that work together to consume and process data from one or more topics. Kafka assigns each partition to exactly one consumer in the group. This balances the workload and ensures that each message is processed by only one consumer in that group.
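The sketch below illustrates the "one partition, one consumer per group" rule with a range-style split of a single topic’s partitions. The function `assign_partitions` is hypothetical and only loosely modeled on Kafka’s range assignor. In a real cluster, the configured assignor and the group coordinator make this decision.

```python
def assign_partitions(partitions: int, consumers: list) -> dict:
    """Range-style split of one topic's partitions across a consumer group.

    Consumers are sorted by name; each gets a contiguous block of partitions,
    and the first (partitions % consumers) members get one extra. Consumers
    beyond the partition count receive nothing and sit idle.
    """
    if not consumers:
        raise ValueError("a consumer group needs at least one member")
    members = sorted(consumers)
    per_member, extra = divmod(partitions, len(members))
    assignment = {}
    start = 0
    for index, member in enumerate(members):
        count = per_member + (1 if index < extra else 0)
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment


print(assign_partitions(5, ["c2", "c1"]))      # c1 gets 3 partitions, c2 gets 2
print(assign_partitions(2, ["a", "b", "c"]))   # "c" is idle: only 2 partitions exist
```

The second call shows why adding consumers beyond the partition count does not add parallelism.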

Kafka reliability and fault-tolerance

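Kafka ensures reliability and fault tolerance through replication. With a replication factor greater than one, each partition has replicas on multiple brokers. If the broker hosting a partition’s leader fails, an in-sync replica on another broker becomes the new leader. Data acknowledged with acks=all is therefore not lost, as long as at least one in-sync replica survives.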
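Here is a minimal sketch of why spreading replicas across brokers matters. It uses a simple round-robin placement, which is an assumption: Kafka’s own assignment also starts at a random broker and can be rack-aware. The functions `place_replicas` and `surviving_replicas` are hypothetical.

```python
def place_replicas(partitions: int, brokers: list, replication_factor: int) -> dict:
    """Round-robin replica placement: replica j of partition p goes to
    broker (p + j) mod len(brokers). The first broker listed for a partition
    plays the role of its preferred leader."""
    if replication_factor > len(brokers):
        # Kafka also refuses to create a topic whose replication factor
        # exceeds the number of available brokers.
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        p: [brokers[(p + j) % len(brokers)] for j in range(replication_factor)]
        for p in range(partitions)
    }


def surviving_replicas(placement: dict, failed_broker: int) -> dict:
    """Replicas left for each partition after one broker goes down."""
    return {p: [b for b in reps if b != failed_broker] for p, reps in placement.items()}


placement = place_replicas(partitions=3, brokers=[1, 2, 3], replication_factor=2)
after_failure = surviving_replicas(placement, failed_broker=2)
# With replication factor 2, every partition still has a replica on a live broker.
```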

Kafka data retention

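Kafka uses a retention policy to control how long messages are kept in the system. Retention can be time-based (retention.ms) or size-based (retention.bytes, which applies per partition). When both are set, data becomes eligible for deletion as soon as either limit is reached.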
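Time-based retention directly drives disk usage. Below is a back-of-the-envelope sketch, assuming a steady write rate and no compression. `estimated_disk_bytes` is a hypothetical helper. Real usage can run somewhat higher, because Kafka deletes whole log segments rather than individual messages and also keeps index files.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def estimated_disk_bytes(bytes_per_second: int, retention_days: int, replication_factor: int) -> int:
    """Rough cluster-wide disk footprint under time-based retention.

    Every byte written during the retention window is kept once per replica.
    Ignores compression, index files, and segment granularity.
    """
    return bytes_per_second * retention_days * SECONDS_PER_DAY * replication_factor


# Hypothetical workload: 1 MB/s written, kept for 7 days, replication factor 3.
footprint = estimated_disk_bytes(1_000_000, retention_days=7, replication_factor=3)
print(f"{footprint / 1e12:.2f} TB")  # roughly 1.8 TB across the cluster
```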

Mastering Kafka Topic Creation

In the dynamic landscape of event streaming, Apache Kafka has emerged as a robust and versatile solution. At the heart of Kafka’s architecture lies the concept of topics, shaping how data is organized, processed, and distributed. In this comprehensive guide, we will explore the intricacies of creating Kafka topics, considering a myriad of application requirements.

Understanding the parameter num.partitions in Apache Kafka

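The num.partitions parameter in Apache Kafka is a broker setting that defines the default number of partitions for topics created without an explicit partition count, such as auto-created topics. When you create a topic explicitly, you set its partition count per topic. Either way, partitions serve as the fundamental unit for parallelism and scalability within Kafka, enabling concurrent processing by multiple consumers.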

Key Aspects:

- Parallelism: The number of partitions caps the level of parallelism in Kafka. More partitions allow more consumers in the same group to process data concurrently.

- Scalability: Partitions are distributed across Kafka brokers, so adding brokers lets Kafka spread partitions, and their load, over more machines.

- Load Balancing: Consumers subscribe to topics, and the consumer group assigns each partition to one consumer. The number of partitions therefore determines how evenly the load can be split. For example, with 6 partitions and 4 consumers, two consumers get two partitions each and the other two get one each.

- Fault Tolerance: Replication happens at the partition level. Each partition has one leader replica and, depending on the replication factor, follower replicas on other brokers. These keep the partition available if a broker goes down.

Considerations:

- Choose Carefully: The number of partitions should be chosen based on the specific use case, considering factors such as expected throughput, the number of consumers, and desired parallelism.

- Planning is Key: Plan the partition count during the initial topic setup. Kafka lets you add partitions to an existing topic but not remove them. Adding partitions also changes which partition each key maps to, which can break per-key ordering for keyed data.

This parameter plays a pivotal role in shaping the behavior of Kafka topics, impacting parallelism, scalability, load balancing, and fault tolerance.
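A commonly cited rule of thumb for sizing partitions works as follows. Measure how much throughput a single partition sustains on the producer side (p) and the consumer side (c) for your workload. Then, for a target throughput t, use at least max(t / p, t / c) partitions. The sketch below also keeps at least one partition per consumer you plan to run in a group. The function name and all numbers are hypothetical; per-partition rates must come from your own benchmarks.

```python
import math


def partitions_needed(
    target_mb_s: float,
    producer_mb_s_per_partition: float,
    consumer_mb_s_per_partition: float,
    planned_consumers: int = 1,
) -> int:
    """Rule-of-thumb partition count: enough partitions that neither the
    producer side nor the consumer side becomes the bottleneck, and at
    least one partition per consumer in the group."""
    by_producer = math.ceil(target_mb_s / producer_mb_s_per_partition)
    by_consumer = math.ceil(target_mb_s / consumer_mb_s_per_partition)
    return max(1, by_producer, by_consumer, planned_consumers)


# Hypothetical: 100 MB/s target, 10 MB/s per partition when producing,
# 20 MB/s per partition when consuming -> producers are the limit.
print(partitions_needed(100, 10, 20))  # 10
```

Some teams round the result up to leave headroom for growth, since partitions can be added later but not removed.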

1. Single Partition vs. Multiple Partitions

In Kafka, the choice between a single partition and multiple partitions for a topic depends on the application’s requirements.

Single Partition

Guarantees a single order for every message in the topic and is simpler to manage. Suitable for scenarios where parallelism is not a priority.

- When to use:

- Ideal for low-throughput scenarios or when a strict order across all messages in the topic is critical.

Multiple Partitions

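Enables concurrent message processing by multiple consumers and enhances system scalability. Ordering is still guaranteed within each partition, so messages that share a key stay in order. Fault tolerance comes from replicating each partition, and that holds regardless of how many partitions a topic has.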

- When to use:

- High-throughput scenarios where parallelism is essential.

- Use cases where scalability is critical. Fault tolerance depends on the replication factor rather than the partition count.
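Choosing multiple partitions does not mean giving up ordering entirely. Records with the same key always land in the same partition, so per-key order is preserved. Below is a minimal sketch of that routing. It uses `zlib.crc32` as a stand-in hash. Kafka’s Java client uses murmur2 for keyed records, so real partition numbers will differ, but the property is the same. The helper names are hypothetical.

```python
import zlib


def partition_for_key(key: str, num_partitions: int) -> int:
    """Map a key to a partition deterministically (crc32 stand-in for murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events: list, num_partitions: int) -> dict:
    """Group (key, value) events by partition, preserving send order within each."""
    partitions = {p: [] for p in range(num_partitions)}
    for key, value in events:
        partitions[partition_for_key(key, num_partitions)].append((key, value))
    return partitions


events = [
    ("acct-1", "debit"),
    ("acct-2", "credit"),
    ("acct-1", "credit"),
    ("acct-1", "close"),
]
routed = route(events, num_partitions=4)
# Every "acct-1" event sits in one partition, in the order it was sent.
```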

Choosing the Right Number of Partitions

2. Throughput and Volume Considerations

High Throughput and Volume

- Example:

- Financial trading platforms processing thousands of transactions per second.

- Configuration:

- Multiple partitions for parallelism.

- Adequate resource allocation to handle the high volume.

Low Throughput and Volume

- Example:

- Small-scale internal applications with occasional data updates.

- Configuration:

- Single partition to simplify management.
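Throughput also shapes producer settings, not just partition counts. The sketch below picks batching overrides only when the estimated write rate is high. `linger.ms`, `batch.size`, and `compression.type` are real Kafka producer configuration keys. The threshold, the specific values, and the helper `producer_overrides` are hypothetical starting points, not tuned recommendations.

```python
HIGH_THROUGHPUT_THRESHOLD_MB_S = 10.0  # hypothetical cut-off; choose your own


def producer_overrides(messages_per_second: float, avg_message_bytes: int) -> dict:
    """Return producer config overrides for the estimated write rate.

    Low-volume apps keep client defaults (simplest to operate); high-volume
    apps trade a little latency for larger, compressed batches.
    """
    mb_per_second = messages_per_second * avg_message_bytes / 1_000_000
    if mb_per_second < HIGH_THROUGHPUT_THRESHOLD_MB_S:
        return {}
    return {
        "linger.ms": "20",               # wait briefly so batches can fill up
        "batch.size": str(128 * 1024),   # larger per-partition batches, in bytes
        "compression.type": "lz4",       # compress whole batches on the producer
    }


print(producer_overrides(100, 500))       # small internal app -> {}
print(producer_overrides(50_000, 1_000))  # ~50 MB/s trading feed -> batching overrides
```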

3. Data Type

- Example:

- Storing JSON data from user interactions.

- Configuration:

- Avro or Protobuf serialization for efficiency.
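Why schema-based binary formats save space: field names live in the schema instead of being repeated in every message. The sketch below uses Python’s `struct` module as a stand-in to show that effect only. It is not Avro or Protobuf, which also add schema evolution and variable-length encodings. The event fields are hypothetical.

```python
import json
import struct

# Hypothetical user-interaction event.
event = {"user_id": 123456, "event_type": 3, "timestamp_ms": 1700000000000}

# Fixed layout both sides agree on, playing the role of a schema:
# little-endian, no padding, uint32 + uint8 + int64 = 13 bytes.
LAYOUT = struct.Struct("<IBq")


def encode_json(e: dict) -> bytes:
    return json.dumps(e).encode("utf-8")


def encode_binary(e: dict) -> bytes:
    return LAYOUT.pack(e["user_id"], e["event_type"], e["timestamp_ms"])


def decode_binary(data: bytes) -> dict:
    user_id, event_type, timestamp_ms = LAYOUT.unpack(data)
    return {"user_id": user_id, "event_type": event_type, "timestamp_ms": timestamp_ms}


print(len(encode_json(event)), len(encode_binary(event)))
```

The JSON form repeats every field name in every message, while the binary form carries only values, at the cost of needing the layout (schema) to decode.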

4. Read/Write Frequency

High Read Frequency

- Example:

- Real-time analytics dashboard.

- Configuration:

- Multiple partitions for parallel consumption.

High Write Frequency

- Example:

- IoT devices sending constant updates.

- Configuration:

- Multiple partitions to distribute the write load.
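For keyless writes, such as sensor readings without a device key, spreading messages over partitions is what distributes the write load. The sketch below uses plain round-robin as a simplification. Since Kafka 2.4, the Java client’s default for keyless records is a sticky strategy that fills a batch for one partition before moving on. That evens out load over time rather than message by message. `spread_round_robin` is hypothetical.

```python
from collections import Counter
from itertools import cycle


def spread_round_robin(num_messages: int, num_partitions: int) -> Counter:
    """Count how many keyless messages land on each partition under round-robin."""
    targets = cycle(range(num_partitions))
    return Counter(next(targets) for _ in range(num_messages))


load = spread_round_robin(num_messages=10, num_partitions=4)
print(load)  # per-partition counts differ by at most one
```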

5. Retention, Durability, and Message Size

Long Retention and Durability

- Example:

- Compliance logs that must be retained for several years.

- Configuration:

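- Set retention.ms to cover the required retention window (-1 disables time-based deletion), and choose a replication factor that keeps the data durable.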

Short Retention and Durability

- Example:

- Real-time monitoring data.

- Configuration:

- Short retention periods to minimize storage requirements.
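Here is a minimal sketch of the two retention profiles above, expressed as topic-level overrides. `retention.ms` and `retention.bytes` are real topic configuration keys, and -1 means "no limit". The seven-year and six-hour windows, the 1 GiB cap, and the helper `topic_retention_config` are hypothetical examples. Leap days are ignored.

```python
MS_PER_HOUR = 60 * 60 * 1000
MS_PER_DAY = 24 * MS_PER_HOUR


def topic_retention_config(retention_ms: int, max_bytes_per_partition: int = -1) -> dict:
    """Build retention overrides; -1 disables the corresponding limit."""
    if retention_ms < -1 or max_bytes_per_partition < -1:
        raise ValueError("use -1 for 'no limit', otherwise a non-negative value")
    return {
        "retention.ms": str(retention_ms),
        "retention.bytes": str(max_bytes_per_partition),  # applies per partition
    }


# Hypothetical compliance topic: keep roughly seven years, no size cap.
compliance = topic_retention_config(7 * 365 * MS_PER_DAY)

# Hypothetical monitoring topic: six hours, capped at 1 GiB per partition.
monitoring = topic_retention_config(6 * MS_PER_HOUR, max_bytes_per_partition=1024**3)
```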

Varied Message Size

- Example:

- Social media posts with images.

- Configuration:

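- Tune compression settings for varying message sizes, and make sure the largest record batch (after compression) fits within the topic’s max.message.bytes limit.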
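Compression affects text and images very differently, which matters when checking message sizes against limits. The sketch below uses `gzip` from the standard library as one example codec. The 1,000,000-byte limit is an assumed value; check your topic’s actual `max.message.bytes`. It also approximates a batch holding a single record, whereas Kafka compresses whole record batches. Random bytes stand in for an already-compressed image.

```python
import gzip
import random

ASSUMED_MAX_MESSAGE_BYTES = 1_000_000  # hypothetical; read your topic's max.message.bytes


def fits_after_compression(payload: bytes, limit: int = ASSUMED_MAX_MESSAGE_BYTES) -> bool:
    """True if the gzip-compressed payload stays within the size limit."""
    return len(gzip.compress(payload)) <= limit


# Repetitive caption text: over the limit raw, tiny once compressed.
caption = b"Sunset over the bay #travel #photo " * 30_000

# Already-compressed media barely shrinks; random bytes simulate a JPEG.
photo_like = random.Random(0).randbytes(1_500_000)

print(len(caption), fits_after_compression(caption))
print(len(photo_like), fits_after_compression(photo_like))
```

For large media, raising limits is not the only option. Some designs store the file elsewhere and publish only a reference to it.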

6. Number of Partitions and Replication Factor

- Example:

- E-commerce platform handling varied workloads.

- Configuration:

- Tune the number of partitions based on expected load.

- Adjust replication factor for fault tolerance.
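The replication factor is usually tuned together with `min.insync.replicas`, a real topic and broker setting that takes effect for producers using `acks=all`. The sketch below shows the arithmetic, assuming unclean leader election stays disabled. `fault_tolerance` is a hypothetical helper.

```python
def fault_tolerance(replication_factor: int, min_insync_replicas: int) -> dict:
    """How many broker failures a partition tolerates, assuming acks=all producers.

    - Writes keep succeeding while in-sync replicas >= min.insync.replicas.
    - An acknowledged record was written to at least min.insync.replicas
      replicas, so it survives losing any (min.insync.replicas - 1) of them.
    """
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("need 1 <= min.insync.replicas <= replication factor")
    return {
        "write_available_with_failures": replication_factor - min_insync_replicas,
        "acked_data_survives_failures": min_insync_replicas - 1,
    }


print(fault_tolerance(3, 2))  # balanced: tolerates 1 failure for both writes and data
print(fault_tolerance(3, 1))  # writes survive 2 failures, but acked data may live on 1 broker
```

The common pairing of replication factor 3 with min.insync.replicas 2 balances write availability against the risk of losing acknowledged data.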

7. Compression

- Example:

- Log data with repetitive patterns.

- Configuration:

- Set compression.type (on the producer or the topic) to minimize network and storage overhead.
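To see why repetitive log data is a good fit for compression, here is a quick measurement sketch using `gzip` from the standard library. Kafka also supports snappy, lz4, and zstd, which trade ratio against CPU differently. The log lines and the helper `compression_ratio` are hypothetical.

```python
import gzip


def compression_ratio(lines: list) -> float:
    """Raw size divided by gzip-compressed size for a batch of log lines."""
    raw = "\n".join(lines).encode("utf-8")
    return len(raw) / len(gzip.compress(raw))


# Hypothetical access-log lines that differ only in the timestamp's seconds.
logs = [
    f"2024-01-01T00:00:{i % 60:02d}Z INFO request handled path=/api/orders status=200"
    for i in range(1000)
]
print(f"{compression_ratio(logs):.1f}x smaller")
```

Kafka compresses whole record batches, so larger batches of similar lines generally compress better than single messages.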


Conclusion

Creating Kafka topics is both a science and an art, requiring a nuanced understanding of your application’s unique requirements. Whether dealing with high or low throughput, varied message sizes, or diverse data types, Kafka’s flexibility allows you to tailor topics to your needs. By carefully considering factors such as partitioning, replication, and compression, you can optimize Kafka topics to serve as the backbone of a resilient and efficient event streaming architecture.